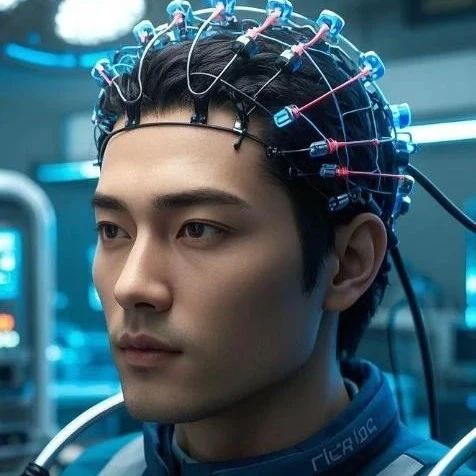

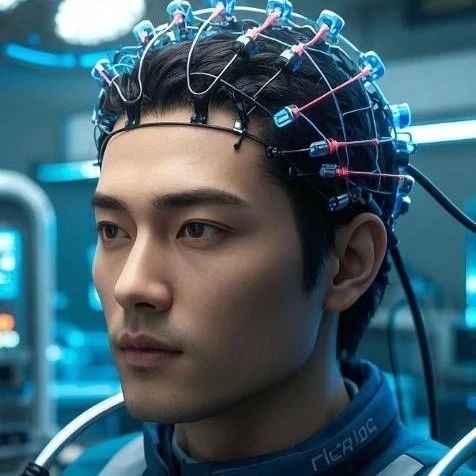

The BCI Technology Handbook is coming soon. Stay tuned!

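The Handbook of BCI (脑机接口技术手册), edited by the Technical Committee of 姬械机科技, will be released soon. BCI engineers and researchers are welcome to follow, subscribe, and share their feedback!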

The Handbook of BCI

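The handbook project was launched in early July 2024. It is meant as a reference and learning resource for BCI developers and professional researchers, covering fundamental technical workflows and key technical information. After a year of work, the first draft was completed in early July 2025. The English edition is now finished and is being prepared for publication, and a Chinese translation will follow. The handbook has 9 chapters and roughly 200,000 words, and it covers every technical element of the full BCI technology stack. Let's take an early look at this technical reference for the BCI field!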

Chapter overviews:

CHAPTER 1: INTRODUCTION TO BRAIN-COMPUTER INTERFACES

This chapter has laid the groundwork for our exploration of Brain-Computer Interface (BCI) technology. We've journeyed from the initial conceptual sparks in the latter half of the 20th century to the vibrant and rapidly evolving field we see today. The core concept of a BCI remains constant: a system that measures signals from the brain and translates them into commands that can control a computer or other device. This creates a direct communication pathway from human intent to external action, bypassing the traditional routes of muscles and nerves.

A central theme that has emerged is the fundamental trade-off between invasiveness and signal quality. This is a critical consideration in the design and application of any BCI system.

The BCI Spectrum: A Recap

We've categorized BCI systems along a spectrum of invasiveness, each with its own distinct advantages and limitations:

Invasive BCIs represent the most direct approach to interfacing with the brain. By surgically implanting electrode arrays into the brain tissue, these systems can record the activity of individual neurons or small neuronal populations with exceptional detail and fidelity. This high signal-to-noise ratio allows for a level of control and precision that is currently unmatched by other methods. However, the significant surgical risks, potential for tissue damage, and long-term stability challenges are major considerations.

Non-invasive BCIs, on the other hand, offer a safe, surgery-free, and accessible means of recording brain activity from the scalp. Techniques like electroencephalography (EEG) are widely used due to their ease of use and relatively low cost. The trade-off, however, is significantly lower signal quality. The skull, scalp, and other tissues act as a filter, smearing the electrical signals and making it more challenging to discern the user's intent with high precision.

Bridging the gap between these two extremes are semi-invasive BCIs. These systems, such as electrocorticography (ECoG), place electrodes on the surface of the brain, beneath the skull but outside the brain tissue itself. This approach provides better signal quality than non-invasive methods while avoiding some of the risks associated with penetrating the brain.

Looking Ahead: Building on the Foundation

The concepts introduced in this chapter are the essential building blocks for understanding the intricacies of BCI technology. The subsequent chapters will delve deeper into the specific domains that make these systems possible:

Neuroscience: We will explore the neural phenomena that BCIs leverage, from the firing of single neurons to the rhythmic oscillations of large brain regions. Understanding the language of the brain is paramount to designing effective BCI systems.

Hardware: We will examine the engineering behind the sensors and devices used to acquire brain signals. We will look at the design of both invasive and non-invasive electrodes, amplifiers, and the associated hardware for signal processing.

Software: Once brain signals are acquired, they must be translated into commands. We will investigate the machine learning algorithms and signal processing techniques that are the workhorses of modern BCIs, enabling the decoding of user intent from complex neural data.

Applications: Finally, we will explore the diverse and growing range of applications for BCI technology. From restoring communication and motor function for individuals with severe disabilities to a future of enhanced human-computer interaction and neuro-gaming, we will see how the foundational principles of BCI are being translated into real-world impact.

By understanding the history, the core concepts, and the fundamental trade-offs outlined here, you are now equipped to embark on a deeper exploration of the fascinating and transformative field of Brain-Computer Interfaces.

CHAPTER 2: THE NEUROLOGICAL BASIS OF BCI

The journey of neural decoding, from its conceptual origins to its modern implementations, reflects a remarkable progression in our ability to interpret the language of the brain. This chapter has traced that path, beginning with the elegant simplicity of the Population Vector Algorithm, which established the principle of population coding. We then saw the critical evolution to dynamic, probabilistic models like the Kalman filter, which introduced temporal modeling and Bayesian priors for robust, continuous control. Finally, we surveyed the current landscape, dominated by machine learning and deep learning, which offer unparalleled flexibility and performance by learning complex, non-linear representations directly from data.
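To make the idea of population coding concrete, here is a minimal sketch of a Population Vector Algorithm. It assumes idealised cosine tuning, where each neuron fires at baseline `b_i` plus `m_i * cos(theta - theta_i)`, and it uses simulated, noise-free firing rates. The function name, the 8-neuron population, and all parameter values are illustrative assumptions, not the handbook's implementation. Each neuron "votes" for its preferred direction, and each vote is weighted by how far that neuron's rate rises above or falls below its baseline.

```python
import numpy as np


def population_vector(rates, baselines, modulations, preferred_dirs):
    """Decode a 2-D movement direction as a weighted sum of preferred directions.

    rates, baselines, modulations: (n_neurons,) arrays (assumed cosine tuning)
    preferred_dirs: (n_neurons, 2) unit vectors
    """
    # Normalise each neuron's rate into a signed weight (about -1..1 under cosine tuning)
    weights = (rates - baselines) / modulations
    # Each neuron votes for its preferred direction, scaled by its weight
    pv = weights @ preferred_dirs
    return pv / np.linalg.norm(pv)


# Hypothetical population: 8 neurons with evenly spaced preferred directions
angles = np.linspace(0, 2 * np.pi, 8, endpoint=False)
preferred = np.stack([np.cos(angles), np.sin(angles)], axis=1)
baseline = np.full(8, 10.0)    # spikes/s, illustrative
modulation = np.full(8, 5.0)   # spikes/s, illustrative

true_theta = np.deg2rad(60.0)
rates = baseline + modulation * np.cos(true_theta - angles)

direction = population_vector(rates, baseline, modulation, preferred)
decoded_deg = np.rad2deg(np.arctan2(direction[1], direction[0]))
print(f"decoded direction: {decoded_deg:.2f} degrees")
```

With evenly spaced preferred directions and noise-free rates, the population vector points exactly along the true direction. With real, noisy, and unevenly tuned populations, the estimate is biased. This is one reason the field moved on to Kalman filters and learned decoders.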

Despite this progress, several fundamental challenges remain at the forefront of BCI research and development.

Robustness and Stability: A persistent obstacle for the clinical translation of BCIs is the degradation of decoder performance over time. Neural recordings are non-stationary; the set of recorded neurons can change, and their tuning properties can shift due to learning and plasticity. This necessitates frequent recalibration, which is burdensome for the user. A key goal is the development of adaptive decoders that can update themselves automatically or, ideally, decoding algorithms that are inherently robust to these signal instabilities [21].

The Data Bottleneck: The performance of modern data-hungry algorithms, especially deep neural networks, is often limited by the quantity and quality of available training data. Acquiring large, well-labeled neural datasets, particularly from human clinical trial participants, is an expensive, time-consuming, and logistically complex process [2].

Generalizability: A decoder trained for a specific subject, on a specific day, for a specific task often fails to generalize to new contexts. Creating decoders that are robust across subjects, sessions, and tasks is a major hurdle for widespread practical application [17].

Looking ahead, the field is charting several exciting paths to address these challenges and push the boundaries of what is possible.

Unification of Encoding and Decoding: The development of models that are trained to be proficient at both predicting neural activity (encoding) and predicting behavior (decoding) represents a promising direction. By forcing a model to learn the bidirectional relationship between brain and behavior, researchers can instill stronger, more principled constraints, leading to more robust and generalizable representations [17].

Interpretability and Explainable AI (XAI): As decoders become more complex and less transparent, a critical frontier is the development of methods to "look inside the black box." Techniques that can visualize the features learned by a deep network or identify the specific neurons most critical for its decisions are essential for bridging the gap between high-performance engineering and fundamental scientific insight. This will allow us not only to build BCIs that work, but to understand why they work [8].

Neuroscientific Foundation Models: Perhaps the most ambitious future direction is the convergence of neural decoding with the foundation model paradigm that has revolutionized artificial intelligence. The goal is to pre-train enormous neural networks on massive, aggregated datasets from many subjects, tasks, and brain areas. Such a "BrainNet" would learn a general-purpose representation of neural activity. This pre-trained model could then be rapidly fine-tuned for a new user or a new task with a minimal amount of specific data, directly addressing the challenges of data scarcity and generalization [17]. This approach shifts the goal from building bespoke decoders for individuals to creating a universal translator for the language of the brain.

The principles of neural decoding are the engine that drives BCI technology. As these principles continue to evolve, powered by advances in neuroscience, engineering, and artificial intelligence, they bring us ever closer to a future where the boundary between thought and action can be seamlessly bridged.

CHAPTER 3: ELECTRODE TECHNOLOGY FOR BCI

This chapter focuses on the practical application of BCI electrodes and reveals a field pushing technological limits on two distinct fronts. For invasive interfaces, the challenge is to master surgical implantation and mitigate the brain's inevitable biological response to a foreign object. Success depends on developing less traumatic insertion techniques and more biocompatible materials to achieve a stable, chronic interface. For non-invasive systems, the challenge is to engineer a device that can leave the laboratory and function reliably amid the complexities of daily life. This requires solving the trilemma of signal quality, user comfort, and motion robustness. Across both domains, a convergent trend is clear: the future of BCI lies in softer, more flexible, and more intelligent interfaces that integrate seamlessly and durably with the human body.

CHAPTER 4: BCI CIRCUITS AND SYSTEMS

The path to a durable, high-performance, and safe implantable brain-computer interface is paved with profound engineering challenges that span the full spectrum of manufacturing, from nanoscale semiconductor physics to macro-scale surgical implantation. This chapter has navigated this complex landscape, from the fabrication of the core CMOS integrated circuit to the development of its vital protective packaging.

We have seen that the choice of CMOS technology provides the essential foundation, enabling the integration of sophisticated, low-power electronics directly at the neural interface and shattering the wiring bottlenecks that limited previous generations of probes. However, this reliance on standard semiconductor processes necessitates a complex and delicate series of post-CMOS fabrication steps to add the biocompatible materials and define the physical structures required for a functional probe. This interface between standard, high-volume manufacturing and custom, low-volume microfabrication represents a key technical and economic challenge for the field.

Ultimately, the long-term survival of these intricate devices is dictated by the quality of their packaging. The human body presents a relentlessly hostile environment, and only a truly hermetic seal can protect the electronics from its corrosive and biologically active nature. While rigid enclosures of titanium and ceramic, sealed with laser welding and brazing, represent the current gold standard for reliability, they introduce a fundamental mechanical mismatch with soft brain tissue. The frontier of packaging research is therefore focused on resolving this conflict through advanced flexible and thin-film encapsulation strategies. These multilayer composite systems, which architecturally combine the hermeticity of inorganic barriers with the compliance of organic polymers, represent the most promising path toward a future of mechanically compatible, chronic neural interfaces.

The journey is far from over. Overcoming the final hurdles of system integration, sterilization, and regulatory approval requires immense resources and a deep commitment to safety and reliability. The future of advanced BCI technology is not in the hands of any single discipline. It is inextricably linked to the continued, collaborative innovation of engineers and scientists dedicated to solving these fundamental challenges of creating a durable, reliable, and safe symbiotic interface between the digital world of electronics and the biological world of the human brain.

CHAPTER 5: BCI DATASETS AND SIGNAL PROCESSING

The methods described in this chapter represent the classical approach to BCI feature extraction, often referred to as "hand-crafted" or "feature engineering." This approach relies on domain expertise—a deep understanding of neurophysiology and signal processing—to design and select features that are believed to be relevant for a given task. While powerful and foundational, this process can be laborious and may overlook complex, non-obvious patterns in the data.
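As an illustration of a hand-crafted feature, the sketch below computes band power, the average spectral power in a frequency band of interest, for one EEG channel. Several choices here are illustrative assumptions: the Hann-windowed periodogram (many pipelines use Welch's method instead), the conventional alpha (8-12 Hz) and beta (13-30 Hz) band edges, and the synthetic signal.

```python
import numpy as np


def band_power(signal, fs, band):
    """Mean periodogram power of a 1-D signal inside band = (low_hz, high_hz)."""
    n = len(signal)
    # A Hann window reduces spectral leakage between neighbouring frequency bins
    windowed = signal * np.hanning(n)
    spectrum = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return spectrum[mask].mean()


fs = 250.0                       # sampling rate in Hz (assumed)
t = np.arange(500) / fs          # 2 s of data
# Synthetic "EEG": a strong 10 Hz alpha rhythm plus a weaker 22 Hz beta component
eeg = np.sin(2 * np.pi * 10 * t) + 0.2 * np.sin(2 * np.pi * 22 * t)

alpha = band_power(eeg, fs, (8.0, 12.0))
beta = band_power(eeg, fs, (13.0, 30.0))
# Log band power is a common feature because it makes the distribution less skewed
features = np.log([alpha, beta])
print(features)
```

In practice this computation is repeated per channel and per band. The resulting vector is then passed to a classifier such as LDA.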

The future of BCI signal processing is increasingly pointing toward end-to-end learning, driven by advances in deep learning, particularly Convolutional Neural Networks (CNNs) [53]. CNNs and other deep architectures have the remarkable ability to learn relevant hierarchical features automatically, directly from raw or minimally preprocessed EEG data [5]. Instead of a BCI designer manually specifying the calculation of band power or CSP filters, a deep learning model can learn the optimal series of convolutions and transformations that best discriminate between mental states. These models can simultaneously learn spatial, spectral, and temporal features, effectively integrating all three domains in a data-driven manner.

While these advanced methods hold immense promise and are already achieving state-of-the-art performance, they are not a panacea. They typically require very large datasets for training, are computationally intensive, and can be difficult to interpret, often being described as "black boxes."

Therefore, the classical methods detailed in this chapter remain indispensable. They form the bedrock of BCI knowledge, providing the fundamental concepts and the performance benchmarks against which all new methods must be compared. A thorough understanding of time-domain statistics, frequency-domain power, and spatial-domain filtering is essential for any student or researcher seeking to innovate in this exciting field, whether by refining classical techniques or by designing the next generation of intelligent, end-to-end BCI systems.
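The sketch below shows spatial-domain filtering with two-class Common Spatial Patterns (CSP). It follows the standard textbook recipe, assumed here rather than taken from the handbook. First, whiten the summed class covariances. Next, eigendecompose one class's whitened covariance. Finally, keep the filters at both ends of the spectrum and use their normalised log-variances as features. The data generator is hypothetical, with one channel carrying extra variance per class.

```python
import numpy as np


def covariance(trials):
    """Average trace-normalised spatial covariance; trials: (n_trials, n_ch, n_samp)."""
    covs = [x @ x.T / np.trace(x @ x.T) for x in trials]
    return np.mean(covs, axis=0)


def csp(trials_a, trials_b, n_filters=2):
    """Return (n_filters, n_channels) spatial filters for a two-class problem."""
    ca, cb = covariance(trials_a), covariance(trials_b)
    # Whitening matrix P such that P (ca + cb) P^T = I
    evals, evecs = np.linalg.eigh(ca + cb)
    whitener = evecs @ np.diag(1.0 / np.sqrt(evals)) @ evecs.T
    # Eigenvalues of the whitened class-A covariance are variance ratios A / (A + B)
    _, s_evecs = np.linalg.eigh(whitener @ ca @ whitener.T)
    filters = (whitener.T @ s_evecs).T  # rows sorted by ascending ratio
    half = n_filters // 2
    # First rows favour class B, last rows favour class A
    return np.vstack([filters[:half], filters[-half:]])


def log_var_features(trials, filters):
    """Normalised log-variance of spatially filtered trials."""
    projected = np.einsum("fc,tcs->tfs", filters, trials)
    var = projected.var(axis=2)
    return np.log(var / var.sum(axis=1, keepdims=True))


rng = np.random.default_rng(0)


def make_trials(strong_channel, n_trials=20, n_channels=4, n_samples=200):
    # Hypothetical data: one channel has three times the amplitude for this class
    x = rng.standard_normal((n_trials, n_channels, n_samples))
    x[:, strong_channel] *= 3.0
    return x


trials_a, trials_b = make_trials(0), make_trials(1)
filters = csp(trials_a, trials_b, n_filters=2)
feats_a = log_var_features(trials_a, filters)
feats_b = log_var_features(trials_b, filters)
print(feats_a.mean(axis=0), feats_b.mean(axis=0))
```

The two feature columns move in opposite directions for the two classes. This is what makes CSP log-variance features easy for a linear classifier to separate.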

CHAPTER 6: ARTIFICIAL INTELLIGENCE FOR BCI

The journey of BCI technology from a laboratory curiosity to a practical, life-changing tool is fraught with challenges, the most formidable of which is the dynamic and non-stationary nature of the brain signals upon which it depends. This inherent variability undermines the stability of BCI models, necessitating frequent and burdensome recalibration, and has been a primary factor limiting the technology's real-world adoption. This chapter has dissected this core problem and explored the three principal algorithmic paradigms developed by the research community to create more robust, adaptive, and reliable BCI systems.

First, we examined how transfer learning and domain adaptation address the calibration burden by reusing knowledge. By aligning data distributions across sessions or subjects—using either sophisticated geometric transformations on Riemannian manifolds or end-to-end adversarial learning—these methods allow for the creation of effective models with significantly less subject-specific data, paving the way for "low-calibration" or even "zero-calibration" systems.
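One of the simplest alignment methods is Euclidean alignment, shown below as a stand-in for the Riemannian re-centering mentioned above. Each session (or subject) is whitened by the inverse square root of its own mean covariance, so that every session ends up with the identity as its mean covariance. Euclidean alignment uses an arithmetic mean where Riemannian methods use a geometric mean. The simulated sessions and mixing matrices are hypothetical.

```python
import numpy as np


def inv_sqrtm(mat):
    """Inverse square root of a symmetric positive-definite matrix."""
    evals, evecs = np.linalg.eigh(mat)
    return evecs @ np.diag(evals ** -0.5) @ evecs.T


def mean_covariance(trials):
    """Arithmetic mean of per-trial covariances; trials: (n_trials, n_ch, n_samp)."""
    return np.mean([x @ x.T / x.shape[1] for x in trials], axis=0)


def euclidean_align(trials):
    """Re-centre one session so its mean covariance becomes the identity."""
    r = inv_sqrtm(mean_covariance(trials))
    return np.einsum("ij,tjs->tis", r, trials)


rng = np.random.default_rng(3)


def make_session(mixing):
    # Hypothetical session: the same kind of sources seen through a different
    # channel mixing (e.g. shifted electrodes or changed impedances)
    sources = rng.standard_normal((30, 4, 250))
    return np.einsum("ij,tjs->tis", mixing, sources)


session_1 = make_session(np.eye(4) + 0.3 * rng.standard_normal((4, 4)))
session_2 = make_session(np.eye(4) + 0.3 * rng.standard_normal((4, 4)))

aligned_1 = euclidean_align(session_1)
aligned_2 = euclidean_align(session_2)
print(np.round(mean_covariance(aligned_1), 3))
```

After alignment, both sessions share the same reference point in covariance space. A classifier trained on one session therefore starts from a much closer distribution when applied to the other.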

Second, we explored the shift toward systems that learn in real-time. Online adaptation allows a BCI to track and compensate for signal drift within a session. More profoundly, co-adaptive learning reframes the entire BCI interaction as a symbiotic partnership. It recognizes that both the user and the machine are adaptive agents that can learn from each other. This mutual learning process, often formalized using the principles of reinforcement learning, is not just an enhancement but a critical component for enabling proficient control, especially for users who struggle with conventional, static systems.
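The sketch below illustrates within-session online adaptation with a nearest-class-mean decoder. After each trial, the decoder moves the winning class's mean toward the new sample using an exponential moving average. This is a deliberately simple stand-in, not the co-adaptive or reinforcement-learning schemes described above. It assumes the true label becomes available after each trial (for example, from the task cue). The drift model, class name, and step size are illustrative.

```python
import numpy as np


class AdaptiveNearestMean:
    """Nearest-class-mean decoder whose class means track slow signal drift."""

    def __init__(self, init_means, rate=0.1):
        self.means = np.array(init_means, dtype=float)
        self.rate = rate  # EMA step size: larger adapts faster but is noisier

    def predict(self, x):
        dists = np.linalg.norm(self.means - x, axis=1)
        return int(np.argmin(dists))

    def update(self, x, label):
        # Pull the labelled class mean a fraction `rate` of the way toward x
        self.means[label] += self.rate * (x - self.means[label])


rng = np.random.default_rng(1)
static = AdaptiveNearestMean([[0.0, 0.0], [2.0, 0.0]], rate=0.0)  # never adapts
adaptive = AdaptiveNearestMean([[0.0, 0.0], [2.0, 0.0]], rate=0.1)

hits = {"static": 0, "adaptive": 0}
n_trials = 200
for t in range(n_trials):
    label = t % 2
    drift = 3.0 * t / n_trials  # hypothetical slow, non-stationary offset
    x = np.array([2.0 * label + drift, 0.0]) + rng.normal(0.0, 0.3, size=2)
    for name, model in (("static", static), ("adaptive", adaptive)):
        hits[name] += model.predict(x) == label  # test before training
        model.update(x, label)

acc = {name: count / n_trials for name, count in hits.items()}
print(acc)
```

Because each trial is scored before the model sees its label, the reported accuracy reflects genuinely online performance. Once the drift exceeds half the class separation, the static decoder fails on one class.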

Finally, we underscored that progress in algorithmic design is meaningless without robust and valid evaluation. The subtle but critical pitfalls of data leakage and overfitting, often stemming from the misuse of standard cross-validation techniques on time-series data, can lead to dramatically inflated and misleading performance claims. Adhering to rigorous evaluation pipelines, such as leave-one-subject-out or leave-one-session-out cross-validation, is not a methodological nicety but a prerequisite for genuine scientific progress and the development of systems that will not fail when deployed.
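Below is a minimal leave-one-session-out evaluation, written without external ML libraries. It shows two points from the paragraph above. First, each fold holds out an entire session. Second, the preprocessing statistics (here a z-score) are fitted on the training fold only, so nothing from the held-out session leaks into training. The nearest-class-mean classifier and the synthetic data are placeholders.

```python
import numpy as np


def leave_one_group_out(groups):
    """Yield (train_idx, test_idx), holding out one session/subject at a time."""
    groups = np.asarray(groups)
    for g in np.unique(groups):
        yield np.flatnonzero(groups != g), np.flatnonzero(groups == g)


def evaluate(features, labels, groups):
    scores = []
    for train, test in leave_one_group_out(groups):
        # Fit normalisation on the training fold only, to avoid leakage
        mu = features[train].mean(axis=0)
        sd = features[train].std(axis=0) + 1e-12
        xtr, xte = (features[train] - mu) / sd, (features[test] - mu) / sd
        # Placeholder model: nearest class mean
        classes = np.unique(labels[train])
        means = np.array([xtr[labels[train] == c].mean(axis=0) for c in classes])
        dists = np.linalg.norm(xte[:, None, :] - means[None, :, :], axis=2)
        pred = classes[np.argmin(dists, axis=1)]
        scores.append(np.mean(pred == labels[test]))
    return np.array(scores)


rng = np.random.default_rng(2)
groups = np.repeat([0, 1, 2], 40)  # three hypothetical recording sessions
labels = np.tile([0, 1], 60)
# Class effect plus a session-specific offset that a random split would hide
features = rng.normal(size=(120, 4)) + labels[:, None] * 1.5 + groups[:, None] * 0.5
scores = evaluate(features, labels, groups)
print("per-session accuracy:", scores.round(2))
```

Reporting one score per held-out session makes between-session variability visible. A single pooled score from a shuffled split would hide it.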

These three paradigms (leveraging prior knowledge, adapting in real time, and ensuring scientific validity) are not mutually exclusive. The future of practical BCI likely lies in their integration [80]. A truly robust system may be initialized using a transfer learning model built from a large population database. It will then operate within a co-adaptive framework, engaging in a continuous, mutual learning dialogue with the user to refine and personalize its performance. And throughout its development, its efficacy will be benchmarked using rigorous, appropriate cross-validation schemes that provide an honest assessment of its generalization capabilities.

Ultimately, this evolution in algorithmic strategy reflects a deeper conceptual shift: from viewing BCI as a static problem of decoding a signal to a dynamic process of skill acquisition. The user is not a passive source of commands to be deciphered, but an active partner learning to master a new form of interaction. The ultimate goal of BCI engineering, therefore, is to design the machine-side of this partnership to be an effective, patient, and adaptive teacher and collaborator. By doing so, we can move closer to the vision of creating truly personalized BCIs that are robust, reliable, and seamlessly integrated into the lives of those who need them most.

CHAPTER 7: EDGE COMPUTING FOR BCI

The successful deployment of Brain-Computer Interfaces in real-world, ambulatory settings hinges on our ability to bridge the gap between the computational demands of powerful AI models and the severe resource constraints of edge hardware. This chapter has provided a detailed technical survey of the four pillars of AI model efficiency: Model Pruning, Quantization, Knowledge Distillation, and the design of Inherently Efficient Architectures. These techniques are not mutually exclusive; rather, they form a synergistic toolkit for the BCI engineer. The most effective solutions will likely employ a pipeline approach: starting with an efficient architecture by design, enhancing its robustness and generalization through knowledge distillation, and then applying hardware-specific pruning and quantization for final deployment optimization [95].
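The last two stages of that pipeline can be sketched on a single weight matrix. The example applies unstructured magnitude pruning and then symmetric per-tensor int8 quantization. These are the simplest variants of each technique, chosen as assumptions for illustration. Production toolchains typically add fine-tuning after pruning, structured sparsity, and per-channel scales. The layer shape and 50% sparsity level are hypothetical.

```python
import numpy as np


def magnitude_prune(weights, sparsity):
    """Zero the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(sparsity * weights.size)
    if k == 0:
        return weights.copy()
    # k-th smallest absolute value serves as the pruning threshold
    threshold = np.partition(np.abs(weights).ravel(), k - 1)[k - 1]
    return np.where(np.abs(weights) > threshold, weights, 0.0)


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization; returns (int8 values, float scale)."""
    scale = np.abs(weights).max() / 127.0
    if scale == 0:
        scale = 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    # Map int8 codes back to real values (float64 here for an exact error check)
    return q.astype(np.float64) * scale


rng = np.random.default_rng(4)
w = rng.standard_normal((64, 32))  # a hypothetical dense layer
pruned = magnitude_prune(w, sparsity=0.5)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)

print("sparsity:", np.mean(pruned == 0))
print("max abs error:", np.abs(restored - pruned).max(), "bound:", scale / 2)
```

Storing int8 codes instead of float32 weights cuts memory by 4x, and rounding error is bounded by half the quantization step. Pruned zeros stay exactly zero, so the two techniques compose cleanly.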

Looking forward, the evolution of efficient AI for BCI is moving along two exciting trajectories. The first is the shift from static inference to dynamic, on-device adaptation. The second is the long-term vision of neuromorphic computing, which promises to align the principles of computation with the principles of the brain itself.

The Rise of On-Device Continual Learning

A fundamental challenge in BCI is the non-stationary nature of neural signals. Brain activity changes over time due to factors like learning, fatigue, and attention shifts [90]. A model that is highly accurate today may see its performance degrade tomorrow. The traditional solution, recalibrating the system by collecting new labeled data, is cumbersome and disruptive to the user. The future of practical BCI lies in on-device continual learning (CL), where the model can adapt to these changes incrementally and autonomously [19].

This introduces a new dimension to the efficiency challenge: models must be efficient not only for inference but also for on-device training or fine-tuning. Techniques like knowledge distillation, which can facilitate rapid adaptation of a student model on an edge device guided by a powerful teacher in the cloud, will be crucial. Similarly, architectures optimized for low-power on-device learning, such as the Parallel Ultra-Low Power (PULP) platform, are demonstrating the feasibility of this vision, enabling wearable BMI systems that can adapt to inter-session changes with minimal energy consumption [19]. This hybrid edge-cloud paradigm, where efficient on-device learning is periodically guided by more extensive computation in the cloud, represents a powerful strategy for creating BCI systems that are both efficient and perpetually adaptive.

The Neuromorphic Horizon: The Ultimate Brain-Inspired Solution

While the techniques discussed in this chapter are essential for optimizing conventional Artificial Neural Networks (ANNs), the long-term future of efficient AI for BCI may lie in a more radical paradigm shift: neuromorphic computing. Inspired directly by the architecture and function of the biological brain, neuromorphic systems represent a fundamental rethinking of computation [5].

Instead of the dense, synchronous operations of ANNs, neuromorphic hardware and the Spiking Neural Networks (SNNs) that run on them are event-driven and sparse [14]. Computation occurs only when a "spike" (a discrete event in time) is transmitted between neurons. This asynchronous, data-driven processing can reduce power consumption dramatically, potentially by orders of magnitude, making it an ideal match for the ultra-low-power requirements of BCI implants and long-duration wearables [5]. The brain itself is the ultimate example of an efficient, resource-constrained computer, and neuromorphic engineering seeks to emulate its principles.
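The event-driven behaviour described above can be seen in a leaky integrate-and-fire (LIF) neuron, a common building block of SNNs. This sketch integrates the membrane equation `tau * dv/dt = -(v - v_rest) + I` with a simple Euler step and emits a spike whenever the threshold is crossed. The time constant, threshold, and input currents are illustrative assumptions rather than values for any particular neuromorphic chip.

```python
import numpy as np


def lif_simulate(input_current, dt=1e-3, tau=20e-3,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Simulate one LIF neuron and return the time-step indices of its spikes."""
    v = v_rest
    spikes = []
    for step, i_t in enumerate(input_current):
        # Euler update of tau * dv/dt = -(v - v_rest) + I
        v += dt / tau * (-(v - v_rest) + i_t)
        if v >= v_thresh:
            spikes.append(step)  # a discrete event: the only output the neuron sends
            v = v_reset
    return np.array(spikes, dtype=int)


# 100 ms of silence followed by 200 ms of supra-threshold input (1 ms steps)
current = np.concatenate([np.zeros(100), np.full(200, 1.5)])
spikes = lif_simulate(current)
# A sub-threshold input settles below v_thresh and never produces an event
quiet = lif_simulate(np.full(300, 0.9))
print("spike steps:", spikes)
print("events for sub-threshold input:", quiet.size)
```

The output is a short list of spike times rather than a dense activation at every step. On event-driven hardware, downstream neurons do work only when those events arrive, and this is where the power savings come from.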

The convergence of BCI and neuromorphic computing represents a truly symbiotic future. It envisions systems where the computational model (the SNN) and the biological system it interfaces with (the brain) operate on similar principles of sparse, event-driven, and ultra-low-power processing. While significant research is still needed to mature SNN training algorithms and neuromorphic hardware, this path offers the tantalizing prospect of a seamless, efficient, and deeply integrated interface between mind and machine, fulfilling the ultimate promise of BCI technology. The journey towards this horizon will be built upon the foundational efficiency techniques detailed in this chapter, which are paving the way for the intelligent, deployable BCI systems of today and tomorrow.

CHAPTER 8: BCI INTERACTION DESIGN AND APPLICATIONS

This chapter shows the journey of Brain-Computer Interface technology from therapeutic aid to a potential tool of human augmentation. The ethical, legal, and societal challenges posed by this neuro-revolution are not mere technical hurdles to be overcome; they are profound quandaries that touch upon the very essence of what it means to be human. This chapter has sought to illuminate the core tensions that define this new frontier: the struggle to preserve mental privacy in an era of neural surveillance; the complex redefinition of agency and identity in a world of shared human-machine control; the looming specter of a neuro-stratified society divided by cognitive capability; and the double-edged sword of enhancement, which promises to elevate our potential while threatening to devalue our humanity.

The analysis reveals that our existing conceptual and regulatory toolkits are insufficient for the task at hand. The path forward cannot be one of technological determinism, where we passively accept the future that innovation delivers. Instead, it demands a proactive, deliberate, and deeply interdisciplinary effort to steer the development of BCI technology in a direction that serves the common good. This requires a multi-layered and adaptive governance approach—one that skillfully combines the force of law, the guidance of international standards, the scrutiny of ethical oversight, and the rigor of technical best practices.

Ultimately, the questions raised by BCI technology are too important to be left to scientists, engineers, and entrepreneurs alone. They demand a broad and inclusive societal deliberation, one that brings together policymakers, ethicists, legal scholars, social scientists, and, most importantly, the public. By fostering a culture of responsible innovation and engaging in an open and honest dialogue about the kind of future we wish to create, we can hope to navigate the complexities of the neuro-revolution and harness the immense power of this technology to enhance human flourishing for all, without sacrificing the values that we hold most dear.

CHAPTER 9: THE FUTURE OF BCI

We have journeyed from the solid ground of present-day engineering to the speculative peaks of a symbiotic future. We have seen the immense technological chasm that must be crossed—a chasm of bandwidth, biocompatibility, and artificial intelligence. We have contemplated the nature of the hybrid mind that might emerge, a mind that collapses the distinction between human and machine, individual and collective. We have confronted the profound implications for our evolution, our identity, and our very definition of humanity, and we have weighed the utopian promise against the dystopian peril.

The central conclusion of this exploration is that the future of brain-computer interfaces is not a predetermined technological outcome to be passively awaited. It is a series of choices that we must make, actively and consciously. The development of a true human-AI symbiosis represents a Promethean bargain of the highest order. We are reaching for the fire of the gods: the power to rewrite our own minds, to merge with our creations, and to take the reins of our own evolution. This power offers almost unimaginable benefits—the end of disease, the amplification of intelligence, the potential for a collective consciousness capable of solving the universe's greatest mysteries. But it carries with it existential risks—the erosion of value, the dissolution of the self, the creation of new and terrible forms of inequality and control.

This brings the challenge directly to you, the readers of this textbook—the next generation of scientists, engineers, clinicians, philosophers, and policymakers. Your task is not merely to build this technology, but to build it wisely. It is a task that cannot be accomplished within the silo of a single discipline. It demands a new and deep collaboration, one that weds technical brilliance with philosophical depth, computational power with ethical foresight, and ambitious innovation with profound humility. The future of the human mind is not yet written. You are charged with the awesome responsibility of architecting a future that enhances our humanity rather than one that erases it.

Exploring Brain and Intelligence Technology

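In addition, in early January 2025 the Technical Committee of 姬械机科技 began writing an English-language monograph that introduces the foundational technologies of brain-inspired intelligence: The Exploration of Brain-based AI (脑与智能技术探索). The first draft is expected in early January 2026, after which publication work will begin. The book covers knowledge and information about brain and intelligence technologies and discusses how the brain's intelligence can help build a new generation of AI. Its core contents are outlined below.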

The Exploration of Brain and Intelligence

Part I: The Intelligence of the Brain

Chapter 1. The Functions of the Brain

Chapter 2. The Mechanisms of the Brain

Chapter 3. Observing the Brain

Chapter 4. The Computation of the Brain

Chapter 5. Modeling the Brain

Part II: Brain-Inspired Intelligence

Chapter 6. Brain-Inspired Intelligent Computing

Chapter 7. Brain-Inspired Behavior Computing

Chapter 8. Brain-Inspired Emotional Computing

Chapter 9. Brain-Inspired Memory Analysis

Chapter 10. Brain-Inspired Complex Decision-Making

Chapter 11. Brain-Inspired Consciousness Simulation

Part III: Brain-Machine Intelligence

Chapter 12. Mind Control and Brain-Computer Interaction

Chapter 13. Brain-Inspired Neuromorphic Chips

Chapter 14. Brain-Inspired Computing

Chapter 15. Brain-Inspired Intelligence

Part IV: Brain and Intelligence

Chapter 16. The Convergent Development of Brain and Intelligence

Chapter 17. Applications of Brain and Intelligence

Chapter 18. The Future of Brain and Intelligence

Invitation to Collaborate: Advancing the Field Together

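Stay tuned! We also welcome engineers and researchers to collaborate with us on translating these two books. Let's work together to advance the brain and intelligence field!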

Contact: Lu (卢老师)

Email: roylou@mashicinerobot.com

END

Contact Us

Business inquiries

bp@maschinerobot.com

Job applications

hr@maschinerobot.com
