Qt and ffmpeg are now both installed, so the next step is to write a program that uses them together.

https://bbs.elecfans.com/jishu_2496310_1_1.html

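Preface

The vendor already ships three official examples, and I opened them to study. The Qt example does use signals and slots, but only for button clicks; to me, what makes Qt shine is the combination of signals and slots with multithreading. The official example also targets Qt 5. The multi-camera streaming example captures with GStreamer and builds its UI with GTK. So I wrote a Qt 6 + multithreading + ffmpeg multi-camera streaming example for reference.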

Writing the code

test.pro: the important change here is adding the libraries that ffmpeg needs.

    QT += core gui

    greaterThan(QT_MAJOR_VERSION, 4): QT += widgets

    CONFIG += c++17

    # You can make your code fail to compile if it uses deprecated APIs.
    # In order to do so, uncomment the following line.
    #DEFINES += QT_DISABLE_DEPRECATED_BEFORE=0x060000    # disables all the APIs deprecated before Qt 6.0.0

    SOURCES += \
        camera.cpp \
        main.cpp \
        mainwindow.cpp

    HEADERS += \
        camera.h \
        mainwindow.h

    FORMS += \
        mainwindow.ui

    LIBS += -lavdevice \
        -lavformat \
        -ldrm \
        -lavfilter \
        -lavcodec \
        -lavutil \
        -lswresample \
        -lswscale \
        -lm \
        -lrga \
        -lpthread \
        -lrt \
        -lrockchip_mpp \
        -lz

    # Default rules for deployment.
    qnx: target.path = /tmp/$${TARGET}/bin
    else: unix:!android: target.path = /opt/$${TARGET}/bin
    !isEmpty(target.path): INSTALLS += target

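    // camera.h
    #pragma once

    #include <QImage>
    #include <QThread>
    #include <cstdint>

    // Size of the displayed picture. The DISPLAY_WIDTH and DISPLAY_HEIGHT
    // definitions that followed this comment are missing from the post.

    extern "C" {
    #include <libavcodec/avcodec.h>
    #include <libavformat/avformat.h>
    #include <libavutil/imgutils.h>
    #include <libswscale/swscale.h>
    }

    class Camera : public QThread {
        Q_OBJECT

    public:
        Camera(QObject *parent = 0);
        virtual ~Camera();
        void set_para(int index, const char *rtsp_str);

    signals:
        void update_video_label(int, QImage *);

    protected:
        virtual void run();

    private:
        int index;
        char *rtsp_str = nullptr;
        uint8_t *dst_data_main[4];
        int dst_linesize_main[4];
        QImage *p_image_main = nullptr;
    };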

    // camera.cpp
    #include "camera.h"

    #include <QDebug>
    #include <cstring>

    Camera::Camera(QObject *parent) : QThread(parent) {
        // Allocate the memory that backs the displayed image
        av_image_alloc(dst_data_main, dst_linesize_main, DISPLAY_WIDTH, DISPLAY_HEIGHT, AV_PIX_FMT_RGB24, 1);
        // Pass the stride explicitly: the rows are packed (align = 1), not padded to 32 bits
        p_image_main = new QImage(dst_data_main[0], DISPLAY_WIDTH, DISPLAY_HEIGHT, dst_linesize_main[0], QImage::Format_RGB888);
    }

    Camera::~Camera() {}

    void Camera::set_para(int index, const char *rtsp_str) {
        // Configure the thread's parameters
        this->index = index;
        this->rtsp_str = new char[255];
        // Bounded copy so an over-long URL cannot overflow the buffer
        strncpy(this->rtsp_str, rtsp_str, 254);
        this->rtsp_str[254] = '\0';
    }

    void Camera::run() {
        // Thread entry point
        int src_width, src_height;
        AVFormatContext *av_fmt_ctx = NULL;
        AVStream *av_stream = NULL;
        AVCodecContext *av_codec_ctx;

        // RTSP connection options
        AVDictionary *options = NULL;
        av_dict_set(&options, "rtsp_transport", "tcp", 0);
        av_dict_set(&options, "buffer_size", "1024000", 0);
        av_dict_set(&options, "stimeout", "2000000", 0);
        av_dict_set(&options, "max_delay", "500000", 0);

        // Create the AVFormatContext and open the stream
        av_fmt_ctx = avformat_alloc_context();
        if (avformat_open_input(&av_fmt_ctx, rtsp_str, NULL, &options) != 0) {
            qDebug() << "Couldn't open input stream.\n";
            return;
        }
        if (avformat_find_stream_info(av_fmt_ctx, NULL) < 0) {
            qDebug() << "Couldn't find stream information.\n";
            return;
        }

        // Pick the first video stream
        for (unsigned int i = 0; i < av_fmt_ctx->nb_streams; i++) {
            if (av_fmt_ctx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
                av_stream = av_fmt_ctx->streams[i];
                break;
            }
        }
        if (av_stream == NULL) {
            qDebug() << "Couldn't find a video stream.\n";
            return;
        }

        // The first (commented-out) line selects ffmpeg's default software decoder,
        // the second selects the rkmpp hardware decoder
        // const AVCodec *av_codec = avcodec_find_decoder(av_stream->codecpar->codec_id);
        const AVCodec *av_codec = avcodec_find_decoder_by_name("h264_rkmpp");
        if (av_codec == nullptr) {
            qDebug() << "Couldn't find decoder codec.\n";
            return;
        }

        // Create the codec context
        av_codec_ctx = avcodec_alloc_context3(av_codec);
        if (av_codec_ctx == nullptr) {
            qDebug() << "Couldn't allocate codec context.\n";
            return;
        }
        avcodec_parameters_to_context(av_codec_ctx, av_stream->codecpar);

        // Open the decoder
        if (avcodec_open2(av_codec_ctx, av_codec, NULL) < 0) {
            qDebug() << "Could not open codec.\n";
            return;
        }

        // Show the decoder in use in the debug output
        qDebug() << av_codec->long_name << " " << av_codec->name;

        src_width = av_codec_ctx->width;
        src_height = av_codec_ctx->height;

        // Create the AVFrame and AVPacket used for decoding
        AVFrame *av_frame = av_frame_alloc();
        AVPacket *av_packet = av_packet_alloc();

        // Create the SwsContext that converts the decoded YUV frames to RGB for the UI
        // and scales them to the display size at the same time
        struct SwsContext *img_ctx = sws_getContext(src_width, src_height, av_codec_ctx->pix_fmt, DISPLAY_WIDTH, DISPLAY_HEIGHT, AV_PIX_FMT_RGB24, SWS_BILINEAR, 0, 0, 0);

        while (true) {
            // Decoding loop
            if (av_read_frame(av_fmt_ctx, av_packet) >= 0) {
                avcodec_send_packet(av_codec_ctx, av_packet);
                while (avcodec_receive_frame(av_codec_ctx, av_frame) == 0) {
                    // Convert the picture
                    sws_scale(img_ctx, (const uint8_t *const *)av_frame->data, av_frame->linesize, 0, src_height, dst_data_main, dst_linesize_main);
                    // Emit a signal carrying the QImage address to the main thread so it can update the UI.
                    // As a rule, the UI must not be updated from a worker thread.
                    // Likewise, long-running work such as network access, I/O and decoding should stay
                    // off the main thread so that it does not freeze and the picture does not stutter.
                    emit update_video_label(index, p_image_main);
                }
                av_frame_unref(av_frame);
                av_packet_unref(av_packet);
            } else {
                msleep(10);
            }
        }
        return;
    }
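One thing worth noting about the listing above: the decoding thread keeps writing into `dst_data_main` while the main thread may still be reading the same bytes through `p_image_main`, so a frame can be displayed half-updated. A minimal sketch of one way to avoid that, in plain C++ with no Qt dependency, is a small "latest frame" mailbox that copies the pixels under a mutex. The class `LatestFrame` and its methods are hypothetical and not part of the original project, and the sketch assumes tightly packed RGB24 rows, which is what `av_image_alloc` with an alignment of 1 produces.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <mutex>
#include <vector>

// Hypothetical helper (not in the original post): keeps a private copy of the
// most recent RGB24 frame so producer and consumer never share a buffer.
class LatestFrame {
public:
    // Called by the decoding thread after each converted frame.
    // Assumes packed rows, i.e. exactly width * 3 bytes per row.
    void publish(const uint8_t *rgb, int width, int height) {
        assert(rgb != nullptr && width > 0 && height > 0);
        const std::size_t bytes = static_cast<std::size_t>(width) * height * 3;
        std::vector<uint8_t> copy(rgb, rgb + bytes);  // copy outside the lock
        std::lock_guard<std::mutex> lock(mutex_);
        pixels_.swap(copy);
        width_ = width;
        height_ = height;
        ++sequence_;
    }

    // Called by the displaying thread. Copies the frame out and returns true
    // only if a frame newer than `last_seen` is available.
    bool take(std::vector<uint8_t> &out, int &width, int &height, uint64_t &last_seen) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (sequence_ == last_seen) {
            return false;
        }
        out = pixels_;
        width = width_;
        height = height_;
        last_seen = sequence_;
        return true;
    }

private:
    std::mutex mutex_;
    std::vector<uint8_t> pixels_;
    int width_ = 0;
    int height_ = 0;
    uint64_t sequence_ = 0;
};
```

In the Qt code itself, the same effect could probably be had by emitting a deep copy by value (for example `p_image_main->copy()` with a `QImage` signal parameter instead of `QImage *`), at the cost of one extra copy per frame.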

    // mainwindow.h
    #pragma once

    #include <QMainWindow>
    #include "camera.h"

    QT_BEGIN_NAMESPACE
    namespace Ui { class MainWindow; }
    QT_END_NAMESPACE

    class MainWindow : public QMainWindow
    {
        Q_OBJECT

    public:
        MainWindow(QWidget *parent = nullptr);
        ~MainWindow();

    public slots:
        void update_video_label(int index, QImage *image);

    private slots:
        void on_pushButton_1_clicked();
        void on_pushButton_2_clicked();
        void on_pushButton_3_clicked();
        void on_pushButton_4_clicked();

    private:
        Ui::MainWindow *ui;
        Camera **camera = new Camera*[4];
        bool status[4] = {false};
    };

    // mainwindow.cpp
    #include "mainwindow.h"
    #include "ui_mainwindow.h"

    MainWindow::MainWindow(QWidget *parent) : QMainWindow(parent), ui(new Ui::MainWindow) {
        ui->setupUi(this);
        // Create the capture threads and connect each one to the slot that updates the view
        camera[0] = new Camera();
        camera[1] = new Camera();
        camera[2] = new Camera();
        camera[3] = new Camera();
        connect(camera[0], SIGNAL(update_video_label(int, QImage *)), this, SLOT(update_video_label(int, QImage *)));
        connect(camera[1], SIGNAL(update_video_label(int, QImage *)), this, SLOT(update_video_label(int, QImage *)));
        connect(camera[2], SIGNAL(update_video_label(int, QImage *)), this, SLOT(update_video_label(int, QImage *)));
        connect(camera[3], SIGNAL(update_video_label(int, QImage *)), this, SLOT(update_video_label(int, QImage *)));
    }

    MainWindow::~MainWindow() {
        delete ui;
    }

    void MainWindow::on_pushButton_1_clicked() {
        // If playback has already started there is nothing to do. Stopping the current
        // capture and then starting a new URL could be added later.
        if (status[0]) {
            return;
        }
        status[0] = true;
        camera[0]->set_para(0, ui->lineEdit_1->text().toStdString().c_str());
        // Start capturing
        camera[0]->start();
    }

    void MainWindow::on_pushButton_2_clicked() {
        // Same handling as the button 1 click
        if (status[1]) {
            return;
        }
        status[1] = true;
        camera[1]->set_para(1, ui->lineEdit_2->text().toStdString().c_str());
        camera[1]->start();
    }

    void MainWindow::on_pushButton_3_clicked() {
        // Same handling as the button 1 click
        if (status[2]) {
            return;
        }
        status[2] = true;
        camera[2]->set_para(2, ui->lineEdit_3->text().toStdString().c_str());
        camera[2]->start();
    }

    void MainWindow::on_pushButton_4_clicked() {
        // Same handling as the button 1 click
        if (status[3]) {
            return;
        }
        status[3] = true;
        camera[3]->set_para(3, ui->lineEdit_4->text().toStdString().c_str());
        camera[3]->start();
    }

    void MainWindow::update_video_label(int code, QImage *image) {
        // Show the captured picture in the matching label
        switch (code) {
        case 0:
            ui->label_video_1->setPixmap(QPixmap::fromImage(*image));
            break;
        case 1:
            ui->label_video_2->setPixmap(QPixmap::fromImage(*image));
            break;
        case 2:
            ui->label_video_3->setPixmap(QPixmap::fromImage(*image));
            break;
        case 3:
            ui->label_video_4->setPixmap(QPixmap::fromImage(*image));
            break;
        default:
            break;
        }
    }
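The button handlers note that stopping a running capture before starting a new one could be added later, but `Camera::run()` loops on `while (true)` and has no way to exit. As a rough sketch of the missing piece, the standard-library class below runs one step repeatedly until it is told to stop, and can then be started again. `StoppableWorker` is a hypothetical stand-in for `Camera`, and the `step` callback stands for one read/decode/emit iteration; none of this is from the original project.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <functional>
#include <thread>
#include <utility>

// Hypothetical stand-in for the Camera thread: calls `step` in a loop until
// stop() is requested, then lets the caller wait for the thread to finish.
class StoppableWorker {
public:
    explicit StoppableWorker(std::function<void()> step) : step_(std::move(step)) {
        assert(static_cast<bool>(step_));
    }

    ~StoppableWorker() { stop(); }

    void start() {
        if (thread_.joinable()) {
            return;  // already running, mirrors the status[] check in the handlers
        }
        keep_running_.store(true);
        thread_ = std::thread([this] {
            while (keep_running_.load()) {
                step_();
                std::this_thread::sleep_for(std::chrono::milliseconds(1));
            }
        });
    }

    // Ask the loop to exit and block until it has done so.
    void stop() {
        keep_running_.store(false);
        if (thread_.joinable()) {
            thread_.join();
        }
    }

    bool running() const { return thread_.joinable(); }

private:
    std::function<void()> step_;
    std::atomic<bool> keep_running_{false};
    std::thread thread_;
};
```

With `QThread`, the equivalent would likely be to loop on `!isInterruptionRequested()` inside `run()` and to call `requestInterruption()` followed by `wait()` from the main thread before freeing the ffmpeg contexts and starting a new URL.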

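Other things that need changing

The first change is to lower the number of parallel jobs Qt uses when building; with too many, the board tends to hang. I set it to 1 here, and doing the same should be enough.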

    ./configure --prefix=/usr --enable-gpl --enable-version3 --enable-libdrm --enable-rkmpp --enable-rkrga --enable-shared
    make -j 6
    sudo make install

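After this finished, though, the problem was still there. Only after a full day did I run ldd test again and suddenly notice something: my install prefix was /usr, so in theory the shared libraries should have been loaded from /usr/lib, so why were they coming from /lib/aarch64-linux-gnu/? I began to suspect that, although I had never installed ffmpeg from the package manager myself, its libraries were probably already on the system. Searching with apt search ffmpeg confirmed it: avformat, avcodec, avutil and the other libraries were all already installed. So do not take things for granted. Once everything else has been ruled out, the cause may be the simplest one, so simple that it never crosses your mind. With the problem found, the next step is to fix it.

The first solution is to uninstall the preinstalled ffmpeg libraries. The drawback is that many packages depend on them, so removing them is fairly tedious, but I strongly recommend it.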
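The ldd check described above can also be made from inside a running program, which shows what was actually loaded rather than what the linker would pick. The sketch below is an assumption-laden illustration and not from the original post: it parses Linux `/proc/<pid>/maps` text and collects the distinct library paths whose file name contains a given substring such as `libavcodec`. The function names are made up for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <fstream>
#include <iostream>
#include <istream>
#include <set>
#include <sstream>
#include <string>

// Collects the distinct file paths in /proc/<pid>/maps-style text whose
// file name (the part after the last '/') contains `needle`.
std::set<std::string> mapped_libraries(std::istream &maps, const std::string &needle) {
    assert(!needle.empty());
    std::set<std::string> paths;
    std::string line;
    while (std::getline(maps, line)) {
        // The path is the last column and is the only field containing '/'.
        const std::size_t slash = line.find('/');
        if (slash == std::string::npos) {
            continue;  // anonymous mapping, [heap], [stack], ...
        }
        const std::string path = line.substr(slash);
        const std::size_t name_start = path.rfind('/') + 1;
        if (path.find(needle, name_start) != std::string::npos) {
            paths.insert(path);
        }
    }
    return paths;
}

// Convenience wrapper for the current process (Linux only).
void print_loaded_libraries(const std::string &needle) {
    std::ifstream maps("/proc/self/maps");
    for (const std::string &path : mapped_libraries(maps, needle)) {
        std::cout << path << '\n';
    }
}
```

Calling `print_loaded_libraries("libav")` early in `main()` of the test program would list every libav* file that was mapped; a path under /lib/aarch64-linux-gnu/ instead of /usr/lib would point to the situation described above.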

    sudo apt remove libavformat-dev libopencv-dev libgstreamer-plugins-bad1.0-dev \
        libcheese-dev libcheese-gtk-dev libopencv-highgui-dev libopencv-contrib-dev \
        libopencv-features2d-dev libopencv-objdetect-dev libopencv-calib3d-dev \
        libopencv-stitching-dev libopencv-videostab-dev libavcodec-dev libavutil-dev \
        libavdevice-dev libswresample-dev libswscale-dev libswresample4 libswscale6 \
        libavutil57 libavformat59 libavcodec59 libchromaprint1 libfreerdp2-2 \
        libopencv-videoio406 gstreamer1.0-plugins-bad libopencv-superres406 \
        libopencv-videoio-dev libopencv-videostab406 libweston-10-0 gnome-video-effects \
        gstreamer1.0-plugins-bad-apps gstreamer1.0-plugins-bad-dbgsym libcheese8 \
        libgstrtspserver-1.0-0 libopencv-superres-dev libweston-10-0-dbgsym \
        libweston-10-dev weston cheese gir1.2-cheese-3.0 gir1.2-gst-rtsp-server-1.0 \
        gstreamer1.0-plugins-bad-apps-dbgsym libcheese-gtk25 libcheese8-dbgsym \
        libgstrtspserver-1.0-dev weston-dbgsym cheese-dbgsym libcheese-gtk25-dbgsym

First, we must not let Qt fall back to the default include and lib paths when it builds. Those defaults are the reason the ffmpeg headers and libraries were found even though the .pro file gave no paths. We need to add the paths explicitly, so modify test.pro as follows:

    QT += core gui

    greaterThan(QT_MAJOR_VERSION, 4): QT += widgets

    CONFIG += c++17

    # You can make your code fail to compile if it uses deprecated APIs.
    # In order to do so, uncomment the following line.
    #DEFINES += QT_DISABLE_DEPRECATED_BEFORE=0x060000    # disables all the APIs deprecated before Qt 6.0.0

    SOURCES += \
        camera.cpp \
        main.cpp \
        mainwindow.cpp

    HEADERS += \
        camera.h \
        mainwindow.h

    FORMS += \
        mainwindow.ui

    INCLUDEPATH += /usr/include

    LIBS += -L/usr/lib \
        -lavdevice \
        -lavformat \
        -ldrm \
        -lavfilter \
        -lavcodec \
        -lavutil \
        -lswresample \
        -lswscale \
        -lm \
        -lrga \
        -lpthread \
        -lrt \
        -lrockchip_mpp \
        -lz

    # Default rules for deployment.
    qnx: target.path = /tmp/$${TARGET}/bin
    else: unix:!android: target.path = /opt/$${TARGET}/bin
    !isEmpty(target.path): INSTALLS += target

    LD_LIBRARY_PATH=/usr/lib ./test

Running, comparison and analysis

First, let's try software decoding with four 1080P 25 FPS RTSP streams connected. The display looks like this:

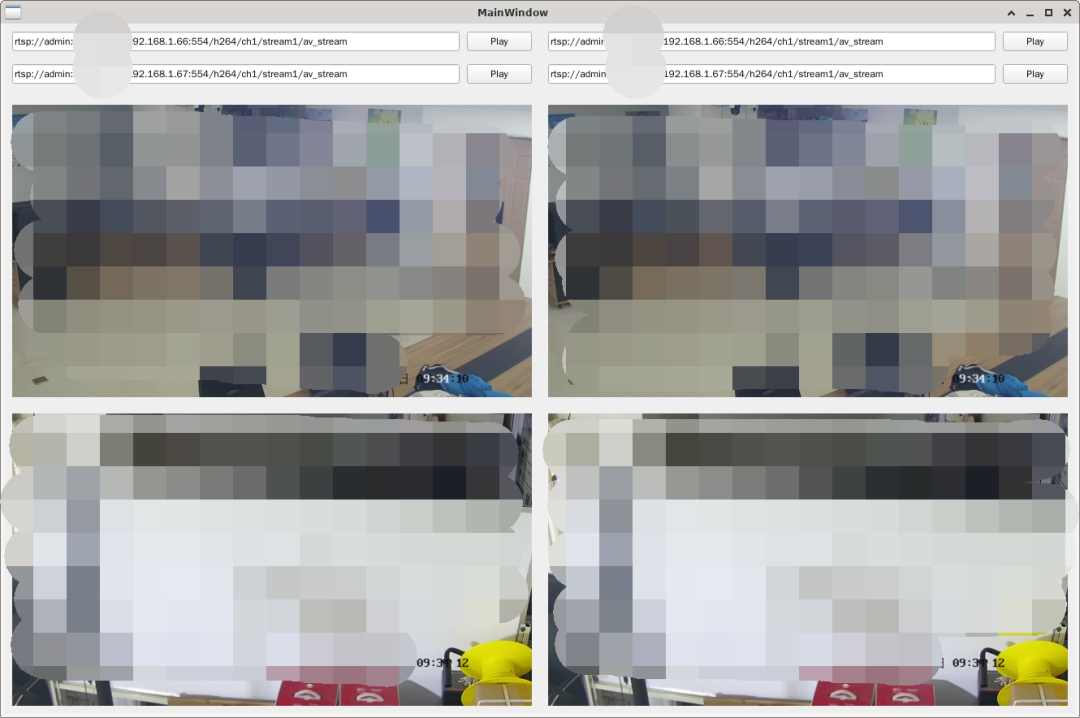

Next, let's feed the same streams through hardware decoding. The picture is even sharper than with software decoding:

CPU usage:
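For anyone who wants to reproduce a CPU comparison like this, overall CPU load on Linux can be sampled from the first line of `/proc/stat`. The sketch below is only an illustration under that assumption and is not how the figures in this post were obtained; the names `CpuSample`, `parse_cpu_line`, `busy_percent` and `read_cpu_sample` are made up for the example. It counts idle and iowait time as idle and ignores the guest fields, which the kernel already includes in user time.

```cpp
#include <cassert>
#include <fstream>
#include <sstream>
#include <string>

// Cumulative CPU time counters, in kernel clock ticks.
struct CpuSample {
    unsigned long long busy = 0;
    unsigned long long total = 0;
};

// Parses the aggregate "cpu ..." line of /proc/stat. Fields, in order:
// user nice system idle iowait irq softirq steal (guest fields are skipped).
bool parse_cpu_line(const std::string &line, CpuSample &out) {
    std::istringstream in(line);
    std::string label;
    if (!(in >> label) || label != "cpu") {
        return false;
    }
    unsigned long long value = 0, total = 0, idle = 0;
    int field = 0;
    for (; field < 8 && (in >> value); ++field) {
        total += value;
        if (field == 3 || field == 4) {
            idle += value;  // idle and iowait
        }
    }
    if (field < 4) {
        return false;  // too short to be a valid line
    }
    out.total = total;
    out.busy = total - idle;
    return true;
}

// Percentage of time the CPUs were busy between two samples.
double busy_percent(const CpuSample &earlier, const CpuSample &later) {
    assert(later.total >= earlier.total);
    const unsigned long long total = later.total - earlier.total;
    if (total == 0) {
        return 0.0;
    }
    return 100.0 * static_cast<double>(later.busy - earlier.busy) / static_cast<double>(total);
}

// Reads one sample for the whole machine (Linux only).
bool read_cpu_sample(CpuSample &out) {
    std::ifstream stat("/proc/stat");
    std::string line;
    return std::getline(stat, line) && parse_cpu_line(line, out);
}
```

Taking one sample, waiting a few seconds while the four streams play, taking a second sample and passing both to `busy_percent` gives an average load for that interval, once with the software decoder and once with `h264_rkmpp`.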
